At my company (Traxens), I had to justify why I want to use both Python AND Spark (Scala). Running two different clusters looks expensive: one with DataStax DSE Cassandra, for running CQL/Solr queries from Python for Proofs of Concept/Proofs of Value, and one with DSE Spark, for using Spark SQL.

In addition, we use TABLEAU® to deliver BI/analytics reports to end users, and TABLEAU needs the Simba Spark SQL driver to connect to Cassandra. We therefore need a Spark cluster with the Thrift server enabled so that TABLEAU can read data and KPIs from our Cassandra tables.

So, here are some arguments in reply to the question: why not develop only in Scala/Spark?

Useful discussions below:

https://www.kdnuggets.com/2016/01/python-data-science-pandas-spark-dataframe-differences.html

http://blog.cloudera.com/blog/2014/03/why-apache-spark-is-a-crossover-hit-for-data-scientists/

- This quick tutorial on ML in PySpark by Databricks is a pretty good walk-through, and it doesn't stray into the proprietary Databricks-only classes: MLlib and Machine Learning (Databricks Documentation)

- This free video course by Eric Charles is pretty nice, including many examples in Scala, Python and R, all executed within Zeppelin. Some of my favorite chapters are:

- Manipulating data frames in Zeppelin, in videos 2.6 and 2.7 (featuring Scala and SQL)

- An introduction to visualizing data in Zeppelin, using ggplot2, matplotlib and Angular.js

- Videos 2.10 and beyond introduce Spark ML and the critical pipeline concept

- Although we will generally prefer the scalable libraries of Spark ML, this introduction to ML with scikit-learn is a nice way into the topic in Python.

https://www.linkedin.com/pulse/java-python-r-scala-many-languages-big-data-speaks-debajani/

http://www.apachespark.in/blog/scala-python-java-to-learn-spark/

https://www.quora.com/Is-Scala-a-better-choice-than-Python-for-Apache-Spark-in-terms-of-performance-learning-curve-and-ease-of-use

Some people will cite performance as a reason to choose Scala over Python when using Spark. I'm going to tell you to put your concerns about speed aside. Everyone in the world knows that compiled languages are faster than interpreted ones. It isn't a debate worth having anymore. It is more valuable to consider what is important to you. Do people in your company know Scala and Python equally well? Then use Scala. Do people know Python better than Scala? Then use Python. Here is an article I wrote on Using Python with Apache Spark.

The reason I suggest Python in this case is that it is much more important to be productive than to figure out the intricacies of a new language. If you were told that your code would run 10% slower, but that you would write it 3-10x faster because you are not learning how a new language works, I'm pretty sure the tradeoff is clear.
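To make that tradeoff a little more concrete, here is a rough break-even sketch in Python. It is only a back-of-the-envelope model under hypothetical assumptions: writing the job in Python takes the Scala development time divided by a speedup factor, every Python run costs 10% more cluster time, and developer hours and cluster hours are priced with simple per-hour rates. The function name and parameters are illustrative, not taken from any library.

```python
def break_even_runs(scala_dev_hours: float,
                    writing_speedup: float,
                    scala_runtime_hours: float,
                    slowdown: float = 0.10,
                    dev_cost_per_hour: float = 1.0,
                    cluster_cost_per_hour: float = 1.0) -> float:
    """Hypothetical model: number of job runs after which Scala's faster
    runtime pays back the development time saved by writing in Python.

    All inputs are planning assumptions, not measurements.
    """
    if min(writing_speedup, slowdown, scala_runtime_hours,
           cluster_cost_per_hour) <= 0:
        raise ValueError("speedup, slowdown, runtime and cluster cost must be positive")
    # Assumption: Python code is written `writing_speedup` times faster.
    python_dev_hours = scala_dev_hours / writing_speedup
    dev_savings = (scala_dev_hours - python_dev_hours) * dev_cost_per_hour
    # Assumption: each Python run takes `slowdown` (e.g. 10%) more cluster time.
    extra_cost_per_run = scala_runtime_hours * slowdown * cluster_cost_per_hour
    return dev_savings / extra_cost_per_run


if __name__ == "__main__":
    # Hypothetical: 40 h of Scala work, Python written 3x faster,
    # a 1-hour job that runs 10% slower in Python.
    print(round(break_even_runs(40, 3, 1.0)))  # prints 267
```

With equal hourly rates, the Scala version only pays off after roughly 267 runs of that hypothetical job; making cluster hours more expensive relative to developer hours lowers that number. The model deliberately ignores maintenance and hiring costs.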

Building a data science team

The availability of developers on the market should also be considered: it matters whenever you need to hire.

Hiring is already complicated for Java developers, and it could become a nightmare if we need to find coders to maintain Scala code. We would have to train them in Scala, whereas they would be ready to maintain Python apps right away.

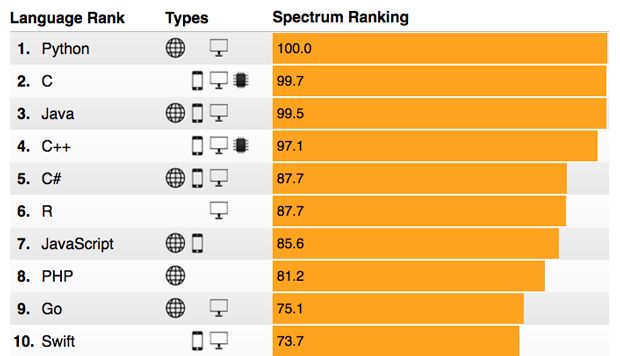

https://spectrum.ieee.org/computing/software/the-2017-top-programming-languages

https://insights.stackoverflow.com/survey/2017
